The year 2023 could be remembered as the time in world history when humans began to accede to a higher power of their own making — artificial intelligence. Supercomputing power, including generative AI that can make art and videos and write, is now at the fingertips of virtually anyone.

Yet, AI remains imperfect in many ways. How much faith should be put in this growing technology? Does it have blind spots?

On one task, distinguishing an accurate news story from a fake (or factually inaccurate) one, a UW-Stout professor has put the power of AI to the test.

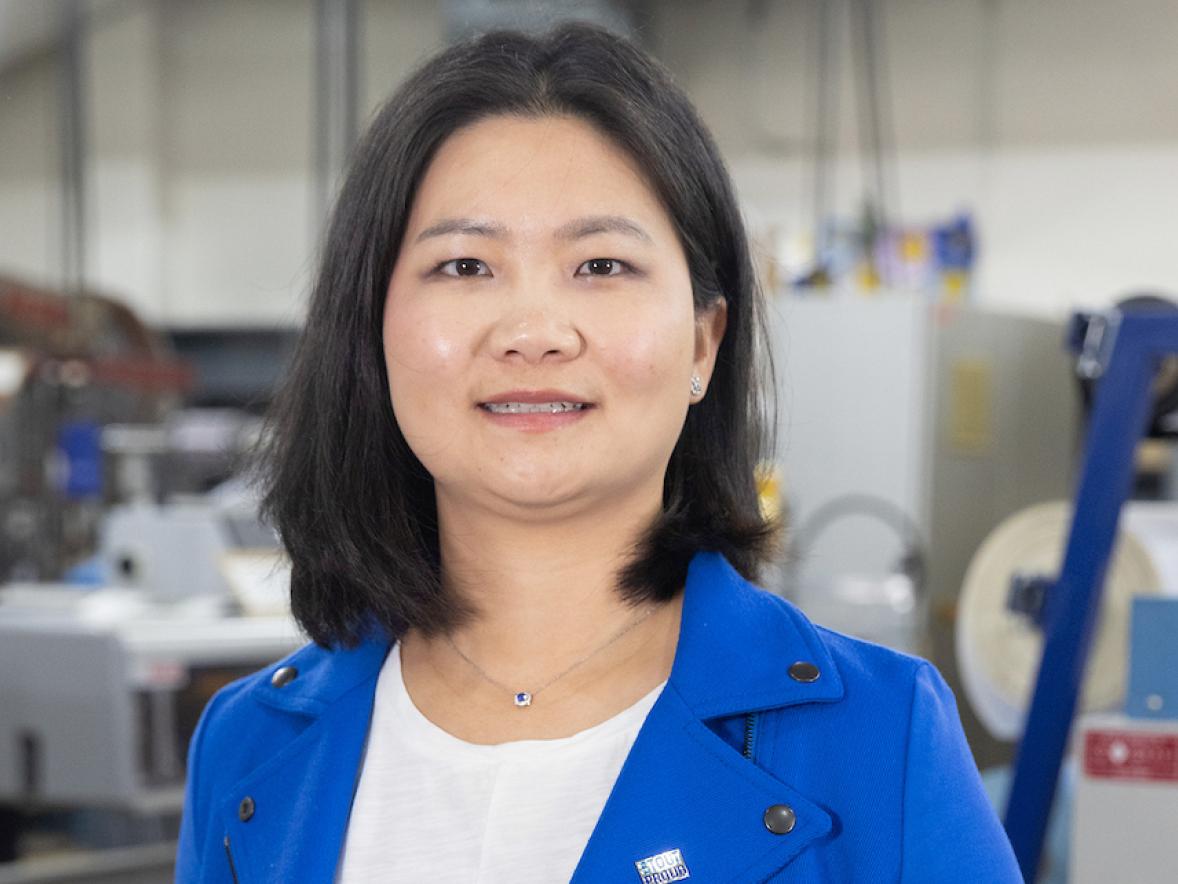

In November, at the Institute of Electrical and Electronics Engineers Future Networks World Forum in Baltimore, Assistant Professor Kevin Matthe Caramancion presented his research, “News Verifiers Showdown: A Comparative Performance Evaluation of ChatGPT 3.5, ChatGPT 4.0, Bing AI, and Bard in News Fact-Checking.”

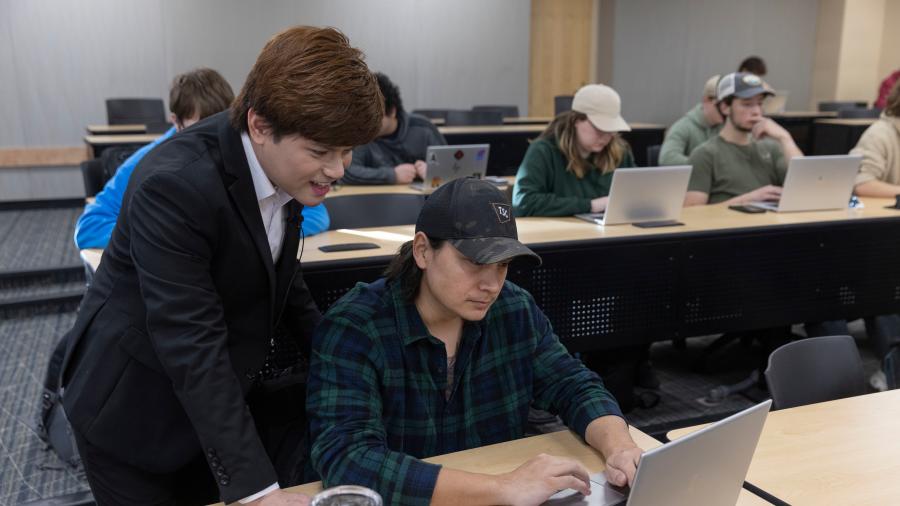

In other words, could AI fact-check a news story as well as a human? Caramancion, who teaches computer science, focused on using large language models for fake news detection. His results indicate that while AI is good at ferreting out fake news, an issue of high importance to voters as the U.S. enters a presidential election year, it shouldn’t yet be fully trusted over humans’ ability to fact-check.

Caramancion used the four AI systems to review 100 news stories that previously had been independently fact-checked. If an academic grade were assigned, AI would have received a D, averaging 65.25% accuracy at determining which stories were true, fake or somewhere in between. “The results showed a moderate proficiency across all models,” Caramancion said.

OpenAI's ChatGPT 4.0 fared the best with a score of 71, “suggesting an edge in newer LLMs' abilities to differentiate fact from deception. However, when juxtaposed against the performance of human fact-checkers, the AI models, despite showing promise, lag in comprehending the subtleties and contexts inherent in news information,” Caramancion said.
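For readers curious how figures like these are typically produced, the following is a minimal sketch, not the study's actual code. It assumes each model's score is the percentage of stories where its verdict matched the independent fact-check, and that 65.25% is the unweighted mean of the four models' scores. The label names, the model names and the toy data are invented for illustration.

```python
from statistics import mean


def accuracy_pct(predicted, reference):
    """Percent of stories where the model's label matches the fact-checkers' label."""
    if not reference or len(predicted) != len(reference):
        raise ValueError("need two non-empty label lists of equal length")
    hits = sum(p == r for p, r in zip(predicted, reference))
    return 100.0 * hits / len(reference)


# Toy data, invented for illustration only (NOT taken from the study).
# Hypothetical label set: "true", "false", "mixed".
reference = ["true", "false", "mixed", "false", "true"]
model_outputs = {
    "model_a": ["true", "false", "true", "false", "true"],  # 4 of 5 match
    "model_b": ["true", "true", "mixed", "true", "false"],  # 2 of 5 match
}

scores = {name: accuracy_pct(preds, reference) for name, preds in model_outputs.items()}
overall = mean(scores.values())  # unweighted mean across models

# Arithmetic implied by the reported figures, under the assumptions above:
# if four models average 65.25 and the best scored 71, the other three
# must average (4 * 65.25 - 71) / 3.
implied_other_three = (4 * 65.25 - 71) / 3

print(scores, overall, round(implied_other_three, 2))
```

Under those assumptions, the three models other than ChatGPT 4.0 would have averaged roughly 63, which fits the description of “moderate proficiency across all models.”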

“The findings highlight the potential of AI in the domain of fact-checking while underscoring the continued importance of human cognitive skills and the necessity for persistent advancements in AI capabilities,” he said.

His study was the first of its kind and takes “a significant step forward in understanding how AI can be tailored and optimized for this critical task. It’s a pioneering contribution that opens up new avenues for both AI development and media studies, addressing a vital need in today’s information-rich society,” he added.

Another UW-Stout faculty member is leading the way on AI issues. Professor David Ding is serving on the Governor’s Task Force on Workforce and Artificial Intelligence. Ding also is director of UW-Stout’s Robert F. Cervenka School of Engineering and an associate dean. The task force will “recommend policy directions and investments related to workforce development and educational systems to capitalize on the AI transformation,” according to the state Department of Workforce Development.

The results of Caramancion’s study have been cited by industry and news organizations around the world.

Caramancion has a doctorate in information science and previously worked for IBM as a researcher and data analyst. Following is a Q&A about his research and AI:

What was the genesis of this research project?

It was primarily driven by the rising prominence of AI in various domains, particularly in fact-checking and news verification. With the proliferation of fake news and misinformation, it's critical to understand how well AI can discern the truth. While AI tools like ChatGPT, Bing AI, and Bard are increasingly being used for this purpose, there was a lack of comprehensive studies assessing their effectiveness.

My interest was not just in whether AI can perform this task but how it compares with human proficiency in understanding the nuances and context of news, which is often layered and complex.

How does AI detect fake news?

AI detects fake news through a combination of techniques. First, it evaluates the content against a vast database of known facts. AI models like ChatGPT are trained on a wide range of internet text, which allows them to have a baseline understanding of commonly accepted truths. Second, AI checks the reliability of sources. For instance, it assesses if the information comes from a recognized and credible news outlet or a known purveyor of misinformation.

However, AI does more than just label news as fake or real. It also provides a basis for its conclusions, citing inconsistencies or highlighting discrepancies with verified facts. This aspect is crucial because it adds a layer of transparency and understanding to AI's fact-checking process. It's not just about the verdict but also about understanding why a piece of news is considered false or misleading.

Why is AI struggling at times to detect fake news?

First, the creators of fake news are becoming increasingly sophisticated, using tactics that mimic legitimate news styles, making detection more challenging. They often mix truths with falsehoods, which can be hard for AI to disentangle. Second, AI's capability is limited by the data it has been trained on. If it hasn't encountered similar falsehoods before, it may fail to recognize them as fake. Additionally, understanding context and nuance is a significant challenge. AI can struggle with sarcasm, satire and complex narratives that humans navigate more intuitively.

Hence, while AI has made significant strides, it's not yet at a level where it can match the nuanced understanding of a skilled human fact-checker. The evolving nature of language and news also means AI models require continuous updates to stay effective.

What’s the time difference between AI’s ability to fact-check an article vs. a human’s?

While a human fact-checker might take hours to thoroughly research and verify a single article, AI can perform a similar level of analysis in a matter of minutes or even seconds. This speed is due to AI's ability to instantly access and process vast amounts of data. Many organizations use AI as a first line of defense, followed by human verification for more complex or borderline cases.

Do X (formerly Twitter), Meta (Facebook), and other major communications platforms use AI to fact-check news stories?

They are indeed utilizing AI to help identify and mitigate the spread of fake news. Other major communications platforms and social media sites are also adopting similar approaches. They often use a combination of automated systems and human oversight to maintain the integrity of the information on their platforms.

However, the effectiveness of these systems varies, and they are continuously being refined to adapt to the ever-evolving landscape of online misinformation.

Can anyone use AI to see if something they’ve read is fake?

Yes, individuals can certainly use AI to help determine the veracity of news they come across. One simple way is to use AI-powered search engines or browser extensions that offer fact-checking features. These tools often cross-reference the content with reliable databases and provide indications of accuracy or flags for potential misinformation.

Additionally, certain news aggregation platforms now incorporate AI-driven fact-checking functionalities. While these AI tools are not infallible, they can certainly assist in identifying news that is likely to be fake, offering a first layer of defense against misinformation.

Is it possible AI has already improved since your study was conducted?

This study was conducted from mid-May to mid-June 2023. Given the rapid pace of advancements in AI technology, it is indeed possible that there have been improvements in AI's capabilities since the conclusion of this research. AI models are continuously being updated with new data, improved algorithms, and enhanced learning capabilities. This is a crucial aspect to consider, as it suggests that the efficacy of AI in news verification is a moving target, constantly evolving.

My study provides a snapshot of the capabilities of these large language models at a specific point in time, and it's essential to continuously re-evaluate their performance to keep pace with technological advancements.
